Full disclosure: Microsoft (where I work) has invested $10B in OpenAI, which runs ChatGPT. I have no connection with anything having to do with that!

Attention to AI has reached a fever pitch. As rumors swirl of a more advanced version of ChatGPT that could be indistinguishable from a human, the world remains rapt with its new favorite toy. For me, trying out Bing’s integration of generative AI for image generation has kept me busy with interesting thought exercises.

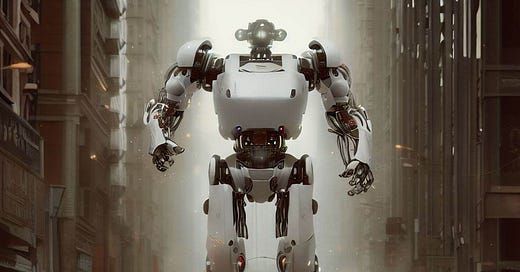

In fact, for the title picture of this article, the prompt was this:

What would it look like if a crowd of professional white men on a city street tried to stop one giant artificial intelligence?

This brings us to the hot topic of the week, at least for technologists. Elon Musk, Steve Wozniak, Yuval Noah Harari, and others have signed a letter asking for a six-month pause on more advanced AI development because the risk to humanity is too significant. “Risk” stands out and raises the question: Is this an ESG issue?

Technology is absolutely a material ESG risk and opportunity. As I wrote in ChatESG!, rolling out any AI requires thoughtful analysis of the data used, stakeholder and environmental impacts, accountability models, and more.

The Pause Letter

The letter itself is pretty apocalyptic and simple. The disastrous scenarios are covered through stark questions about world-ending possibilities like disinformation, job loss from automation, creating more AIs than humans, and ultimately losing control of our civilization. There’s a lot in these questions that stokes fear of a hypothetical future.

As with any good argument, at the end is a brief concession that opportunities exist. Again, risks and opportunities.

The letter states the risks are as high as possible. As a result, the moratorium that is being sought in the letter has this condition:

This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.

While the pause letter focuses on undeveloped AI technologies, Italy became the first country to ban ChatGPT, citing digital privacy concerns, and other EU regulators are investigating whether they should follow.

The letter also states that during this pause (again, a six-month window), AI labs and independent experts should develop a shared set of safety protocols for AI development, with independent third-party verification.

Before concluding with a cringe-inducing play on the season ‘fall’ and a literal ‘fall’ of civilization, the letter calls for policy and regulation that drive toward new governance of AI development, and it offers various recommendations.

From an ESG perspective

Well…the letter isn’t very actionable. I mean, calling for a pause is the opposite of action. It also assumes that there is a well-organized governing body worldwide with influence over governments, regulators, and corporates. That isn’t a thing, as there isn’t a COP for technology. However, there is work to build on that doesn’t require stopping development. Several organizations and initiatives are active here, including the Rome Call, the Partnership on AI (which OpenAI has joined), the Montreal AI Ethics Institute, and the Distributed AI Research Institute, not to mention the public statements on Responsible AI from Microsoft and Google.

Still, the letter misses the universe of existing problems with AI that these organizations are attempting to address, which is a widely shared criticism. These problems harm people today and can perpetuate social injustice, and they are material issues for companies. Responsible AI practices, a well-researched topic, and accountability are critical to adopt.

And so we have a thoughtful statement about the pause letter from the four authors of the AI Ethics paper On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?: Timnit Gebru (DAIR), Emily M. Bender (University of Washington), Angelina McMillan-Major (University of Washington), Margaret Mitchell (Hugging Face). The paper is cited first in the pause letter, so their response is well worth the read. If you aren’t familiar with the Stochastic Parrots paper, I recommend checking out this article for a summary. Needless to say, these women are leaders in AI and AI Ethics.

They list three problems with AI that exist today and should be addressed now, summarized here:

Consolidation of benefits to a few on the back of workers and data theft

Synthetic content that can further oppress and create disinformation

Exacerbation of social inequities by the consolidation of power

Before I hear shouts of ‘whataboutism,’ they are right. Each of these is a material consideration for those using AI models. If a company contributes to any of these problems, willfully or not, the result could be reputational damage, the rollback of technology products, regulatory infractions, fines, and, most importantly, stakeholder harm.

So, could a moratorium ever work on anything?

Well, stopping progress is hardly going to get us anywhere. If your company operates in a competitive environment, it’s almost a risk not to at least explore AI in some way. Taking a thoughtful approach and layering on accountability (think Governance here) is a better start. Per the statement:

The onus of creating tools that are safe to use should be on the companies that build and deploy generative systems, which means that builders of these systems should be made accountable for the outputs produced by their products.

Internal data scientists and developers need time to consider the issues, and they need to be empowered by leadership to speak out and adjust when something is a risk. From here, accountability can be assigned and may even be gladly accepted. After all, no one wants to have to pull back a project.

What should corporates do?

While the pause letter made many headlines last week, I’m not sure AI model development will pause. So if you are a corporate, don’t mistake the pause being asked for here for a recommendation that you pause. Instead, as the pause letter makes the news cycle and AI continues to garner attention, I would recommend finding and adopting Responsible AI practices, and then taking it a little further:

Have open conversations between business unit leads, product managers, and developers about AI technologies in use at the company and the protections, processes, and controls around them.

Ensure you understand your stakeholders and the impact AI has, even beyond your immediate stakeholders. Don’t forget to consider invisible stakeholders in your value chain that are impacted.

Develop an understanding of your externalities around AI, like the environmental impact of model training and use or the universe of existing cognitive biases.

Listen to the experts in the field, follow existing guidance, and participate in working groups and research where you can.

Another good idea is to build transparency, explainability, and feedback checks into model development. The EU and the US are working toward AI regulations, so thinking about these issues now is quality Governance and may give you a head start.

It’s also important to remember that many of these problems start with people, just like the issues that plague technology today. There isn’t some massive AI boogeyman out there coming to end the planet. As with any ESG risk or opportunity, it’s essential to look at the problem from several angles and make the best decision with the available information. Be careful and be responsible.

Resources

We Should Consider ChatGPT Signal For Manhattan Project 2.0 (forbes.com)

AI Isn’t Omnipotent. It’s Janky. (The Atlantic)

The AI moratorium open letter has a longtermist problem (Popular Science, popsci.com)